Introduction

In this article, we will show how to set up a CI/CD pipeline to deploy a containerized ASP.NET Core (5.0) Web API application into an OpenShift Kubernetes cluster using Azure DevOps.

The following image will walk you through all the steps explained in this article.

What is a CI/CD pipeline?

A CI/CD pipeline is a series of steps that must be performed to deliver a new version of software. Implementing continuous integration/continuous delivery (CI/CD) pipelines is one of the best practices for DevOps teams, because it lets them deliver code changes more frequently and reliably.

Continuous Integration

Continuous Integration (CI) is the process of automating the build and testing of code every time a team member commits changes to version control.

Continuous Delivery

Continuous delivery (CD) extends continuous integration: after the build stage, it automatically deploys all code changes to a testing and/or production environment.

Prerequisites & Setup

- Azure DevOps account: We will use an Azure DevOps project for the Git repo and the build/release pipelines. Create an account and a new project here. Make sure you have Project Administrator rights in the Azure DevOps project.

- Red Hat OpenShift cluster: OpenShift Container Platform is a platform as a service built around Docker containers that are orchestrated and managed by Kubernetes, on a foundation of Red Hat Enterprise Linux. In this article we use an OpenShift cluster provisioned on AWS. You can read my previous article on how to set up an OpenShift cluster on AWS (

Let’s start! We’ll now dive into the details of how to set up the CI/CD pipeline.

Step 01 : Create Application & Push to GitHub

In this step, create a GitHub account or log in to an existing one, then create a public repository. After that, create a simple .NET Core Web API application using Visual Studio 2019 and push the source code to the GitHub repository.

Source Code

You can download the source code from my GitHub profile.

Step 02 : Create Continuous Integration (CI) pipeline

1. You also need a Microsoft Azure DevOps account. Once you log into this account, you should see a list of your organizations on the left, and all projects related to your organization on the right. If you do not have any projects, it is time to add a new one.

2. Create a pipeline: navigate to the Pipelines tab in the left side panel and click the Create button to create a new pipeline.

3. Select the GitHub option, then select the repository that we created in Step 01.

4. Select the Starter pipeline option. A starter pipeline begins with a minimal pipeline that you can customize to build and deploy your code.

5. Azure DevOps creates a basic YAML pipeline. Rename the pipeline.

What’s a Classic UI pipeline and what’s a YAML pipeline?

With the Classic UI, you build a pipeline using a GUI editor; this is the original way Azure DevOps pipelines were created. YAML is the newer way: you define the pipeline as code, in YAML format, and commit it to a repo. Azure DevOps has an assistant to help you create and modify YAML pipelines, so you don’t have to remember every possible setting or keep looking up references. For a new project with a modern team, it's better to go with YAML.
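To give a feel for the YAML style, the starter pipeline that Azure DevOps generates looks roughly like the sketch below. The exact template may differ in your organization, and the branch name master is an assumption taken from the branch mentioned in the summary of this article.

```yaml
# azure-pipelines.yml: minimal starter pipeline (sketch)
trigger:
- master                   # assumption: run on every commit to master

pool:
  vmImage: ubuntu-latest   # Microsoft-hosted Linux agent

steps:
- script: echo Hello, world!
  displayName: 'Run a one-line script'

- script: |
    echo Add other tasks to build, test, and deploy your project.
  displayName: 'Run a multi-line script'
```

The remaining steps in this article replace these placeholder script steps with real build, test, and deploy tasks.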

6. Expose the OpenShift image registry.

In OpenShift Container Platform, the registry is not exposed outside of the cluster at the time of installation.

Log in to the OpenShift cluster using the OpenShift CLI tool.

oc login -u <cluster-admin-username> -p <password>

To expose the registry using the default route, set defaultRoute to true.

oc patch configs.imageregistry.operator.openshift.io/cluster --patch '{"spec":{"defaultRoute":true}}' --type=merge

Get the host name of the image registry.

HOST=$(oc get route default-route -n openshift-image-registry --template='{{ .spec.host }}')

echo $HOST

Grant the user permission to view and edit the registry.

oc policy add-role-to-user registry-viewer <user_name>

oc policy add-role-to-user registry-editor <user_name>

Extract the TLS certificate of the router CA.

oc extract secret/router-ca --keys=tls.crt -n openshift-ingress-operator

The private key that you use to connect to your instance must not be publicly visible. Run the following command so that only the file owner can read the file.

chmod 400 yourPrivateKeyFile.pem

For example:

chmod 400 openshift_key.pem

To copy files between your computer and your instance, you can use a file transfer client such as FileZilla or the scp command. scp stands for "secure copy" and copies files between computers on a network. You can use it from a terminal on a Unix/Linux/Mac system.

scp -i /directory/to/abc.pem user@ec2-xx-xx-xxx-xxx.compute-1.amazonaws.com:path/to/file /your/local/directory/files/to/download

For example:

scp -i openshift_key.pem ubuntu@ec2-3-23-104-65.us-east-2.compute.amazonaws.com:/home/ubuntu/tls.crt tls

7. Create OpenShift projects and image streams.

Create two projects using the OpenShift UI or by running the following commands.

oc new-project darshana-dev

oc new-project darshana-uat

Create an ImageStream in each project (DEV, UAT).

An imagestream and its associated tags provide an abstraction for referencing container images from within OpenShift Container Platform. The imagestream and its tags allow you to see what images are available and ensure that you are using the specific image you need even if the image in the repository changes (Link).
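An image stream can be created from the web console or from a manifest. The following is a minimal sketch of such a manifest for the DEV project; the image stream name demo-api and the file name are hypothetical and are not taken from the original repository.

```yaml
# imagestream-dev.yaml (hypothetical file name)
apiVersion: image.openshift.io/v1
kind: ImageStream
metadata:
  name: demo-api            # hypothetical image stream name
  namespace: darshana-dev   # DEV project
spec:
  lookupPolicy:
    local: false            # do not resolve plain image names against this stream
```

Applying the file with oc apply -f imagestream-dev.yaml creates the image stream. Repeating it with namespace darshana-uat gives the UAT project its own stream.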

8. Create a new service connection for the OpenShift image registry in Azure DevOps.

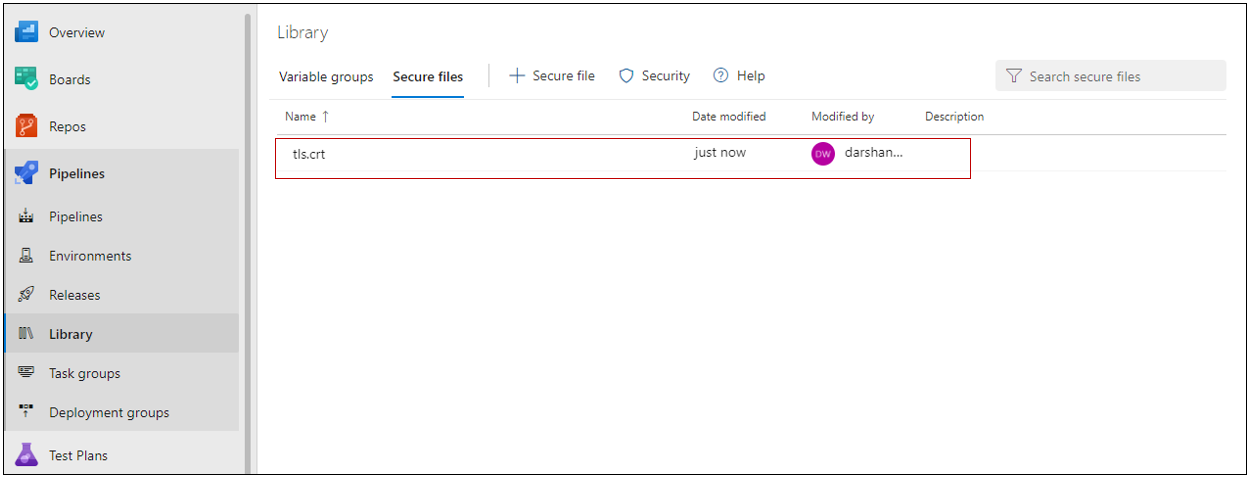

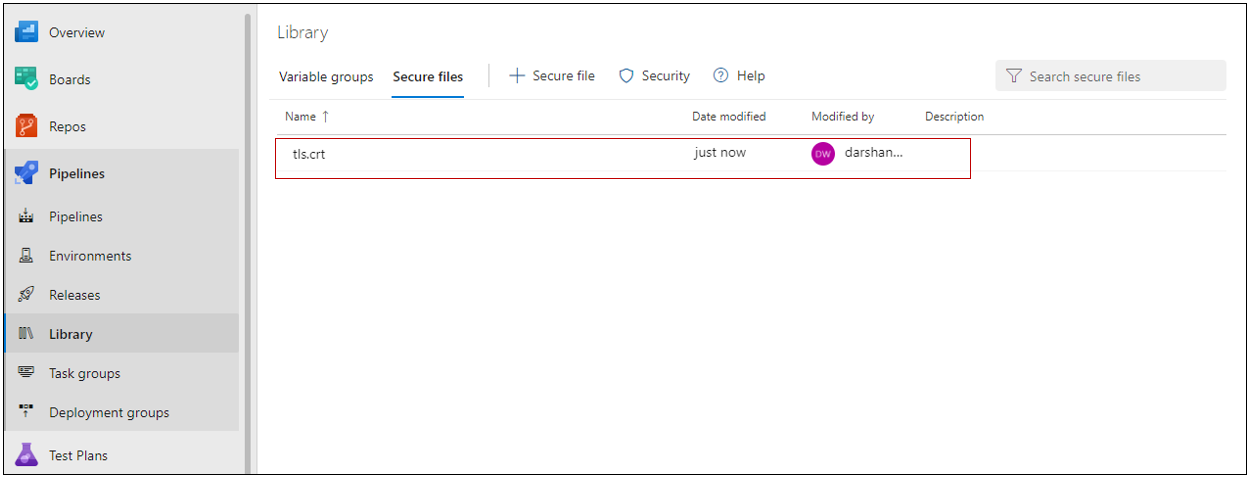

9. Upload the TLS certificate as a secure file in Azure DevOps.

Navigate to the Azure DevOps Library and press the (+) button to upload the TLS certificate that we downloaded in the previous step.

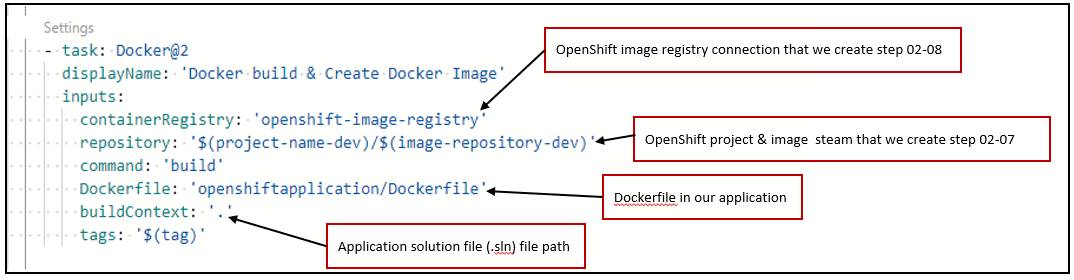

Use the DownloadSecureFile task in a pipeline to download a secure file to the agent machine.

10. Build a Docker Image

Here we build a Docker image based on the Dockerfile in our repository. Once the image is built, you can push it to the OpenShift image registry (Step 04). (You can also use any other image registry, such as Azure Container Registry, Docker Hub, or Google Container Registry.)
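The secure file download and the image build can be sketched together as follows. This is not the original pipeline: the service connection name openshift-registry, the registry host value, the repository name, and the secure file name tls.crt are all assumptions that you should replace with your own values. The sketch also assumes a Linux agent where sudo works without a password, as on Microsoft-hosted Ubuntu agents.

```yaml
variables:
  # assumption: the value printed by "echo $HOST" when exposing the registry
  registryHost: 'default-route-openshift-image-registry.apps.example.com'
  # assumption: <project>/<image stream>
  imageRepository: 'darshana-dev/demo-api'

steps:
# Download the certificate uploaded to Library > Secure files
- task: DownloadSecureFile@1
  name: registryCa
  displayName: 'Download registry CA certificate'
  inputs:
    secureFile: 'tls.crt'

# Docker trusts CA files placed under /etc/docker/certs.d/<registry host>/
- script: |
    sudo mkdir -p "/etc/docker/certs.d/$(registryHost)"
    sudo cp "$(registryCa.secureFilePath)" "/etc/docker/certs.d/$(registryHost)/ca.crt"
  displayName: 'Trust the registry certificate'

# Build the image; naming the service connection prefixes the image with the registry host
- task: Docker@2
  displayName: 'Build Docker image'
  inputs:
    command: build
    containerRegistry: 'openshift-registry'   # hypothetical service connection name
    repository: '$(imageRepository)'
    Dockerfile: '**/Dockerfile'
    tags: |
      $(Build.BuildId)
      latest
```

Tagging the image with both the build ID and latest keeps a traceable tag per run while still giving the image stream a moving latest tag.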

Step 03 : Unit testing & Code coverage

Unit testing, also known as component testing, is a level of software testing where individual units or components of the software are tested. The purpose is to validate that each unit of the software performs as designed.

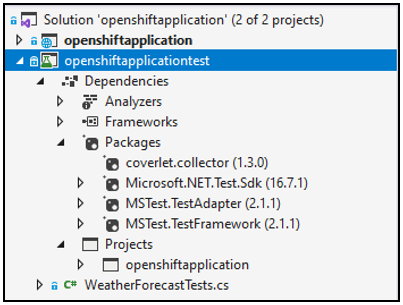

1.) Create a unit test project

Unit tests provide automated software testing during your development and publishing. MSTest is one of three test frameworks you can choose from; the others are xUnit and NUnit. You can create a unit test project using Visual Studio (link), adding the test project to the same solution. You can also download the source code from my Git repository, where I added a very basic single test method.

The test project must reference Microsoft.NET.Test.Sdk version 15.8.0 or higher and the other MSTest NuGet packages.

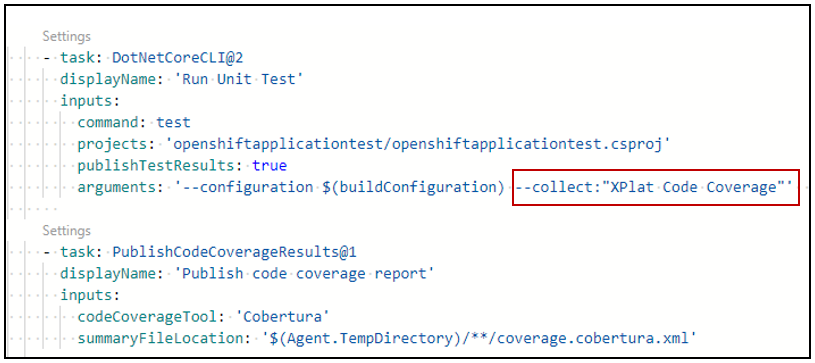

2.) Add unit test task

Add the following snippet to your azure-pipelines.yml file:

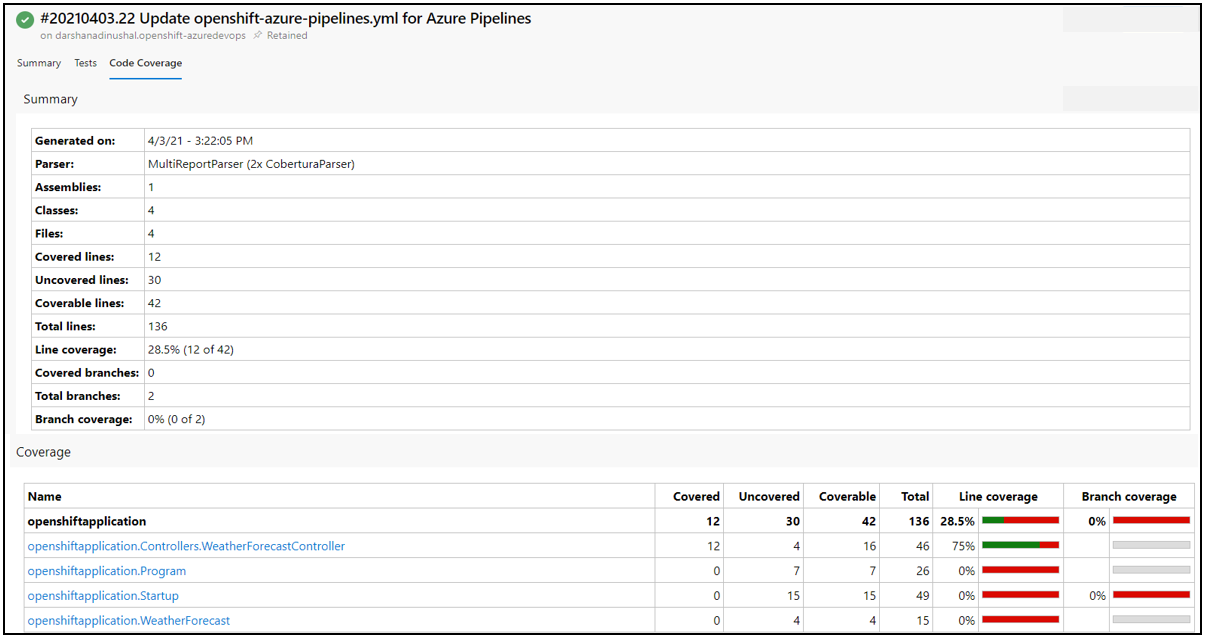

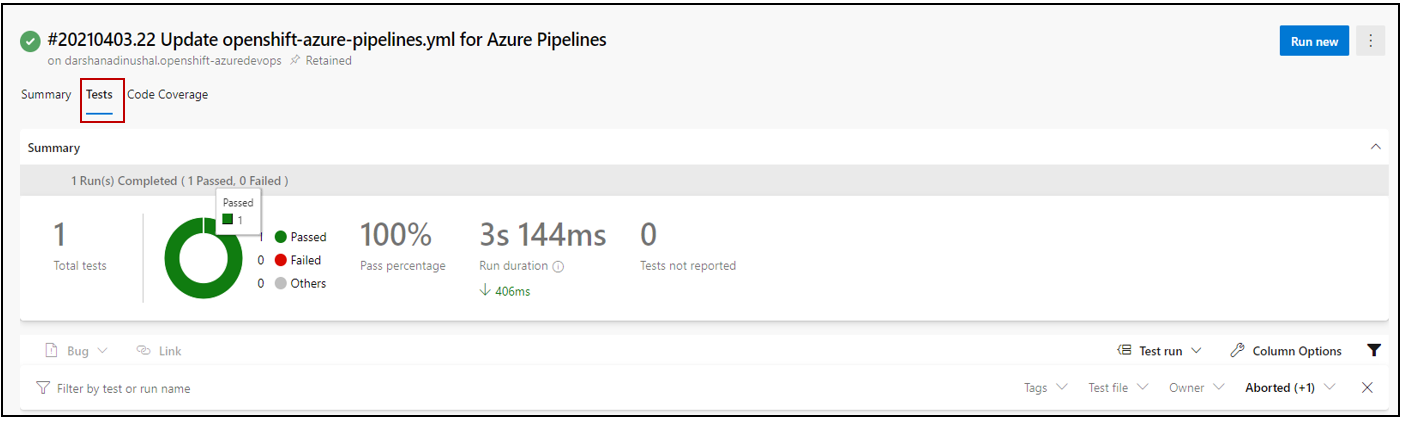

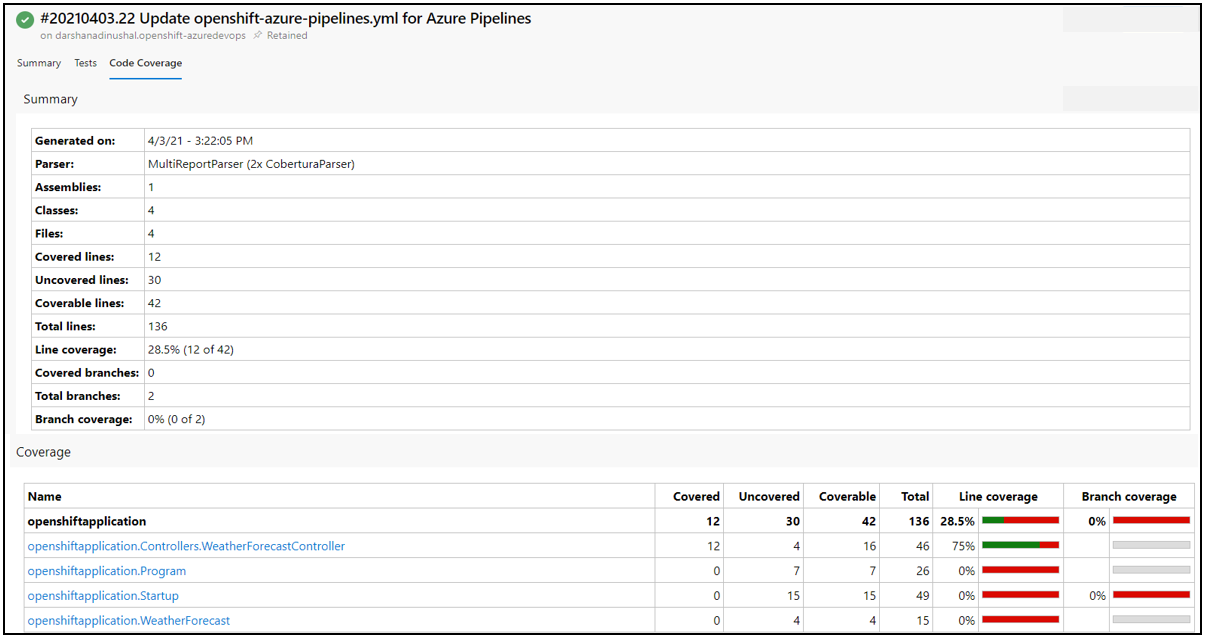
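For reference, a test step of this kind can be sketched in text form as follows. The project glob and the Release configuration are assumptions; adjust them to match your solution.

```yaml
steps:
- task: DotNetCoreCLI@2
  displayName: 'Run unit tests'
  inputs:
    command: test
    projects: '**/*Tests.csproj'          # assumption: test project names end in "Tests"
    arguments: '--configuration Release'  # assumption: build configuration
```

With the test command, the task publishes the test results to the pipeline run by default, so they appear on the Tests tab.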

3.) Run your unit test

Run the pipeline and see the test result.

Unit tests help to ensure functionality and provide a means of verification for refactoring efforts. Code coverage is a measurement of the amount of code that is run by unit tests: lines, branches, or methods.

Add the following snippet to your azure-pipelines.yml file:
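A minimal sketch of such a snippet is shown below. It assumes the test project references the coverlet.collector NuGet package, which provides the "XPlat Code Coverage" data collector, and it uses version 1 of the publish task; newer task versions take different inputs.

```yaml
steps:
# Run the tests and collect coverage in Cobertura format
- task: DotNetCoreCLI@2
  displayName: 'Run unit tests with coverage'
  inputs:
    command: test
    projects: '**/*Tests.csproj'   # assumption: test project names end in "Tests"
    arguments: '--configuration Release --collect:"XPlat Code Coverage"'

# Publish the coverage report to the pipeline run
- task: PublishCodeCoverageResults@1
  displayName: 'Publish code coverage'
  inputs:
    codeCoverageTool: Cobertura
    summaryFileLocation: '$(Agent.TempDirectory)/**/coverage.cobertura.xml'
```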

Run the pipeline and see the code coverage result.

Step 04 : Push Docker Image

We use the following YAML snippet with a Docker task to log in and push the image to the OpenShift image registry.

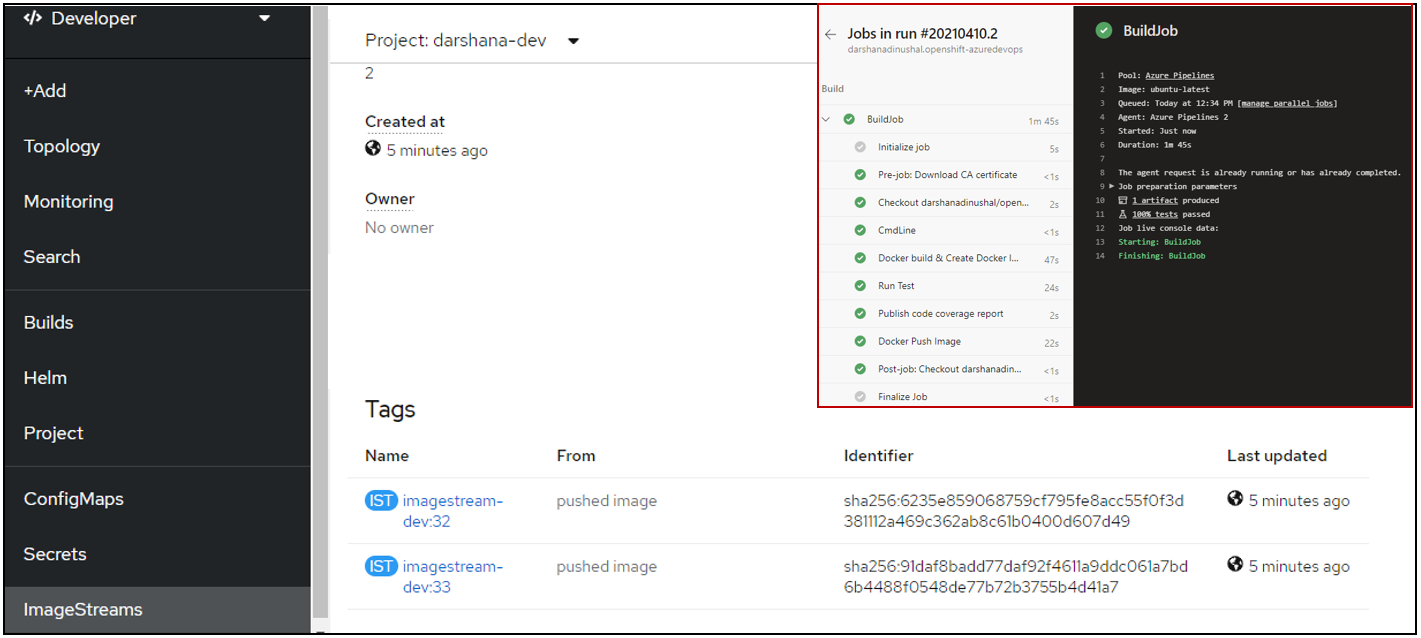

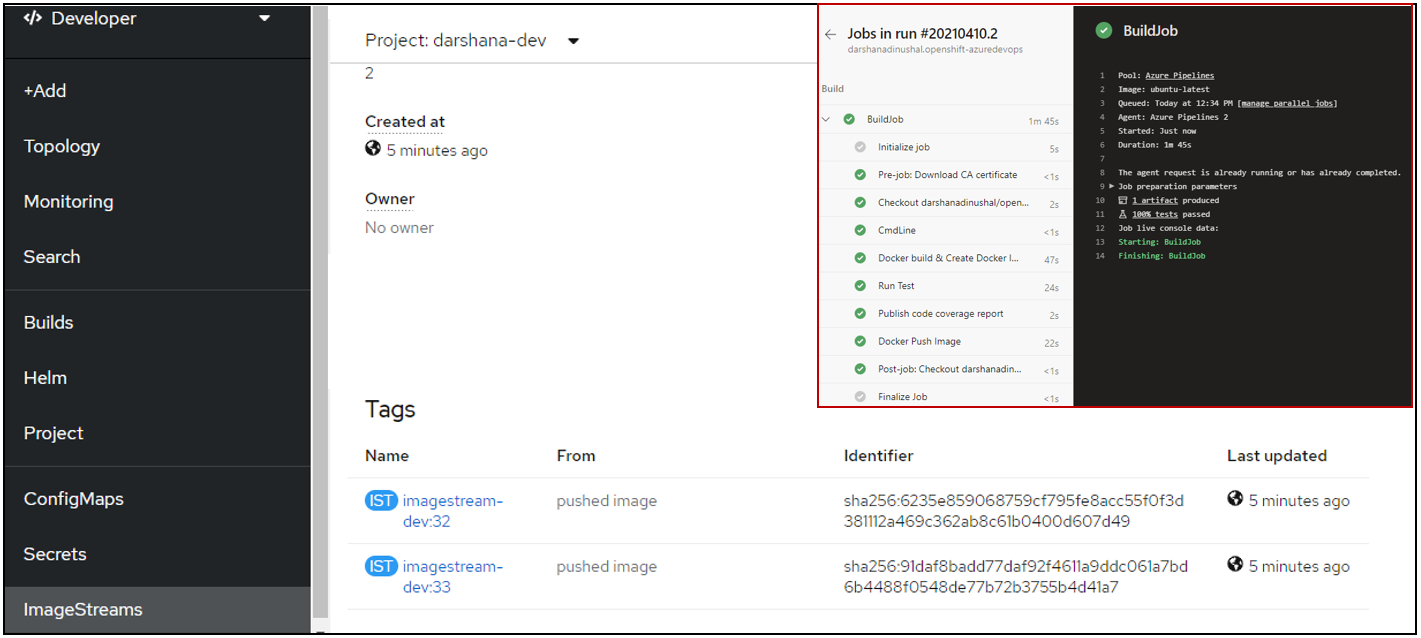
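In text form, the login and push steps can be sketched like this. The service connection name openshift-registry and the repository darshana-dev/demo-api are hypothetical; they must match the values used when the image was built.

```yaml
steps:
# Authenticate against the registry behind the service connection
- task: Docker@2
  displayName: 'Log in to the OpenShift registry'
  inputs:
    command: login
    containerRegistry: 'openshift-registry'   # hypothetical service connection name

# Push the tags that the build step produced
- task: Docker@2
  displayName: 'Push Docker image'
  inputs:
    command: push
    containerRegistry: 'openshift-registry'
    repository: 'darshana-dev/demo-api'       # assumption: <project>/<image stream>
    tags: |
      $(Build.BuildId)
      latest
```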

Run the pipeline and verify that the Docker image is uploaded successfully to the image registry.

Navigate to the image stream and see the images.

Step 05 : Continuous Delivery (Deploy to DEV)

In this step we deploy the Docker image that we created in the previous step to an OpenShift deployment.

1.) Install the Red Hat OpenShift deployment extension for Azure DevOps.

The OpenShift VSTS extension can be downloaded directly from the marketplace at this link.

2.) Configure the OpenShift service connection.

Now you need to configure the OpenShift service connection, which connects Azure DevOps to your OpenShift cluster.

- Log into your Azure DevOps project.

- Click on Project Settings at the bottom left of the page.

- Select Service Connections.

- Click on New service connection and search for OpenShift.

- Pick the authentication method you would like to use (basic, token, or kubeconfig).

- Insert your own OpenShift cluster data.

Once the extension can authenticate to the Red Hat OpenShift cluster, you are ready to perform operations in OpenShift by executing oc commands directly from Azure DevOps.

3.) Create a deployment config to deploy the Docker image to the DEV project

In this step we use the OpenShift Developer perspective to create a .NET Core application and build it on OpenShift.

The Developer perspective in the web console provides options in the +Add view to create applications and associated services and deploy them on OpenShift Container Platform (Link).

Navigate to the Developer perspective, then Topology, choose the Container Image option, and configure it as follows.

You can download my deployment config file and other files from my Git repository (Link).
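For orientation, a deployment config of this kind can be sketched as a manifest like the one below. It is not the file from the repository: the names, the port, and the ASPNETCORE_URLS setting are assumptions. Port 8080 is chosen because containers on OpenShift usually run as a non-root user.

```yaml
# deploymentconfig-dev.yaml (hypothetical file name)
apiVersion: apps.openshift.io/v1
kind: DeploymentConfig
metadata:
  name: demo-api              # hypothetical application name
  namespace: darshana-dev
  labels:
    app: demo-api
spec:
  replicas: 1
  selector:
    app: demo-api
  template:
    metadata:
      labels:
        app: demo-api
    spec:
      containers:
      - name: demo-api
        # internal registry reference; the image trigger below keeps it up to date
        image: image-registry.openshift-image-registry.svc:5000/darshana-dev/demo-api:latest
        env:
        - name: ASPNETCORE_URLS
          value: 'http://+:8080'   # assumption: listen on an unprivileged port
        ports:
        - containerPort: 8080
          protocol: TCP
  triggers:
  - type: ConfigChange
  - type: ImageChange
    imageChangeParams:
      automatic: true           # redeploy when demo-api:latest changes
      containerNames:
      - demo-api
      from:
        kind: ImageStreamTag
        name: demo-api:latest
```

With the ImageChange trigger set to automatic, a new push to the latest tag of the image stream starts a new rollout without further action.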

Run and Test your application.

4.) Add the DEV stage to the pipeline.
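A DEV stage can be sketched as follows. To avoid guessing the task names of the OpenShift extension, this sketch calls the oc CLI from a plain script step. It assumes that oc is available on the agent, that the CI steps live in a stage named Build, and that the pipeline variables openshiftServer and openshiftToken (a secret) exist; all of these names are hypothetical.

```yaml
stages:
- stage: DeployDev
  displayName: 'Deploy to DEV'
  dependsOn: Build                 # assumption: the CI steps are in a stage named Build
  jobs:
  - job: Rollout
    pool:
      vmImage: ubuntu-latest
    steps:
    - script: |
        oc login --server="$OPENSHIFT_SERVER" --token="$OPENSHIFT_TOKEN"
        # Wait for the rollout started by the image change trigger to finish.
        # Without an automatic trigger, run "oc rollout latest dc/demo-api" first.
        oc rollout status dc/demo-api -n darshana-dev
      displayName: 'Wait for the DEV rollout'
      env:
        OPENSHIFT_SERVER: $(openshiftServer)   # hypothetical pipeline variable
        OPENSHIFT_TOKEN: $(openshiftToken)     # hypothetical secret variable
```

Secret variables are not exposed to scripts automatically, which is why the token is mapped explicitly through env.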

Run the pipeline and verify that the new deployment is successfully deployed to the DEV project.

Step 06 : Continuous Delivery (Deploy to UAT)

1.) Tag the DEV image and push it to the UAT image stream.

In this step we tag the DEV image and push it into the UAT image stream.
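One way to do this promotion is the oc tag command, run from a pipeline script step. This is a sketch under several assumptions: the oc CLI is available on the agent, the image stream is named demo-api in both projects, and the variables openshiftServer and openshiftToken are hypothetical pipeline variables.

```yaml
steps:
- script: |
    oc login --server="$OPENSHIFT_SERVER" --token="$OPENSHIFT_TOKEN"
    # Point the UAT image stream tag at the image currently tagged latest in DEV
    oc tag darshana-dev/demo-api:latest darshana-uat/demo-api:latest
  displayName: 'Promote DEV image to UAT'
  env:
    OPENSHIFT_SERVER: $(openshiftServer)   # hypothetical pipeline variable
    OPENSHIFT_TOKEN: $(openshiftToken)     # hypothetical secret variable
```

The user behind the token needs access to both projects for the cross-project tag to succeed.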

Run the pipeline and verify that the Docker image is pushed to the UAT project image stream.

2.) Create a deployment config to deploy the Docker image to the UAT project

As in Step 05 (3), we create a new application for UAT. After creating the application, run it and verify that it is running correctly.

3.) Update the Azure DevOps pipeline with the UAT deployment.
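A UAT stage can be sketched as below. It uses a deployment job bound to an Azure DevOps environment named uat, which is my assumption and not necessarily how the original pipeline was built. The stage name DeployDev, the application name demo-api, and the pipeline variables are hypothetical as well, and the oc CLI is assumed to be available on the agent.

```yaml
stages:
- stage: DeployUat
  displayName: 'Deploy to UAT'
  dependsOn: DeployDev              # assumption: name of the DEV stage
  jobs:
  - deployment: RolloutUat
    environment: 'uat'              # hypothetical Azure DevOps environment
    pool:
      vmImage: ubuntu-latest
    strategy:
      runOnce:
        deploy:
          steps:
          - script: |
              oc login --server="$OPENSHIFT_SERVER" --token="$OPENSHIFT_TOKEN"
              # Wait until the UAT rollout for the promoted image has completed
              oc rollout status dc/demo-api -n darshana-uat
            displayName: 'Wait for the UAT rollout'
            env:
              OPENSHIFT_SERVER: $(openshiftServer)   # hypothetical pipeline variable
              OPENSHIFT_TOKEN: $(openshiftToken)     # hypothetical secret variable
```

Binding the job to an environment makes it possible to add an approval check in Azure DevOps before anything reaches UAT.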

Run the pipeline and verify that the new deployment is successfully deployed to the UAT project.

Summary

Congratulations! You are now ready to use the Azure DevOps pipeline. Once a developer commits code changes to a branch and merges them into the master branch, the pipeline is triggered automatically and deploys the new version of the application to DEV and UAT.
